Research and add findings to Notion

While apps like ChatGPT (Deep Research) and Perplexity can research for you, you still have to manually copy and paste the research into your documents. Let's build an AI agent that can research and add its findings to Notion for us.

Scenario

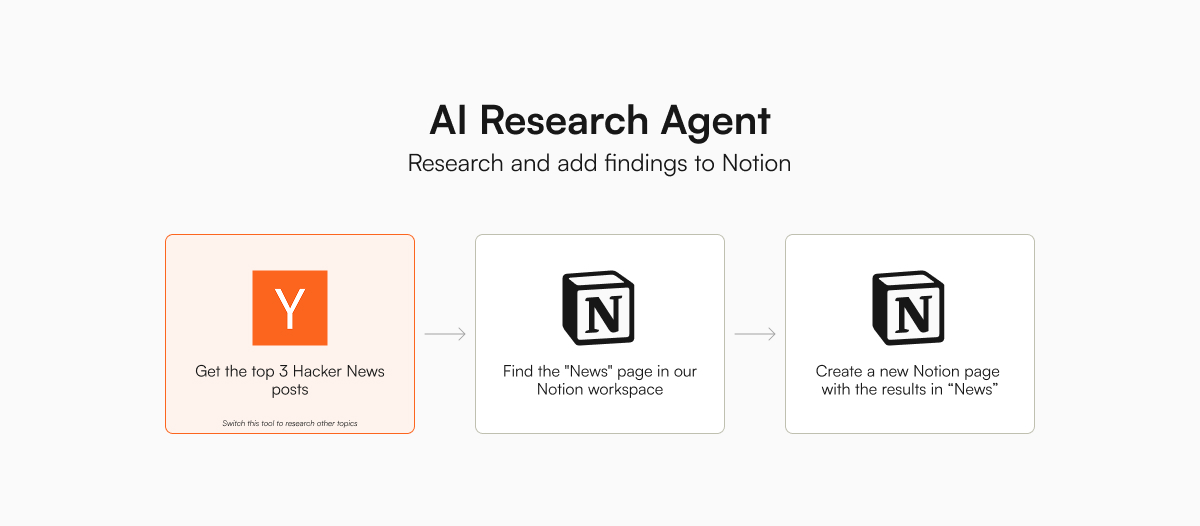

For this demo, we will show how to build an AI agent that can get the top three Hacker News posts and add them to a Notion page named "News".

Specifically, our AI agent will:

- Get the top 3 Hacker News posts

- Find the "News" page in our Notion workspace

- In the "News" page, create a new page with the specified title and the research results

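To complete this task, our AI agent is equipped with two sets of tools, both loaded in the script below: the Hacker News tools (silanthro/hackernews) for fetching posts and the Notion tools (silanthro/notion) for finding and creating pages.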

Even though we are researching Hacker News in this example, you can use a web search or Reddit search tool to find the news you are interested in.

Setup

To get started, we first set the following environment variables in our .env file:

- NOTION_INTEGRATION_SECRET: The integration secret of the Notion account you want to use (see below)

- <COMPANY>_API_KEY: The API key of the model you want to use

- The Hacker News tools do not require an API key.
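Before running the agent, it can help to fail early if a variable is missing. Here is a minimal sketch of such a check. The helper name `missing_env_vars` is our own, and `ANTHROPIC_API_KEY` is an assumption that matches the Anthropic script used later in this guide; swap in the key name for your model provider.

```python
import os

# NOTION_INTEGRATION_SECRET comes from the list above. ANTHROPIC_API_KEY is an
# assumption that matches the Anthropic script later in this guide.
REQUIRED_VARS = ["NOTION_INTEGRATION_SECRET", "ANTHROPIC_API_KEY"]


def missing_env_vars(required, environ=None):
    """Return the names in `required` that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in required if not environ.get(name)]
```

You could call `missing_env_vars(REQUIRED_VARS)` right after `load_dotenv()` and stop with a clear message if the returned list is not empty.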

Notion integration setup

We will need to set up a Notion integration so that our AI agent can access our Notion workspace securely. To do that and to get the Notion integration secret:

- Go to https://www.notion.so/profile/integrations/

- Click on "New integration"

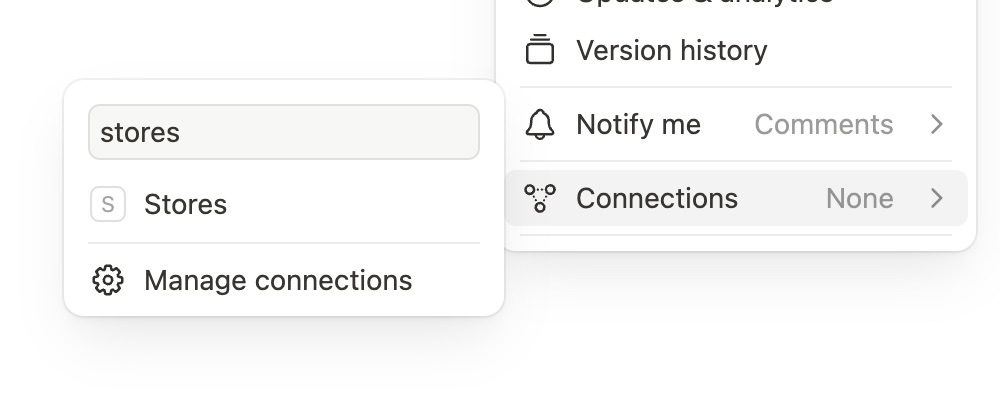

- Add your integration name (e.g. "Stores" or "AI Assistant")

- Select the workspace for this integration

- Select "Internal" for the integration type (This keeps things simple, unless you want to share your integration with others)

- Click on "Save"

- Copy the "Internal Integration Secret" and save it as an environment variable

With an internal integration, we have to explicitly grant access to specific pages. I find this helpful because I can restrict the pages that the AI agent can access.

- Go to the page you want to grant access to (e.g. a "News" page)

- Click on the three dots at the top-right corner

- Click on "Connections" (usually the last item in the menu)

- Click on your integration to enable access to this page and its children

The AI agent will then be able to access only this page and its children.
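To confirm that the integration can actually see the page, one option is to query Notion's search endpoint directly. The sketch below uses only the standard library. The helper names are our own, and the `Notion-Version` value is an assumption, so check Notion's API reference for the current version.

```python
import json
import urllib.request

NOTION_SEARCH_URL = "https://api.notion.com/v1/search"
NOTION_VERSION = "2022-06-28"  # Assumed API version; check Notion's docs


def build_search_request(secret, query):
    """Build the URL, headers, and JSON body for a Notion page search."""
    headers = {
        "Authorization": f"Bearer {secret}",
        "Notion-Version": NOTION_VERSION,
        "Content-Type": "application/json",
    }
    # Restrict the search to pages (as opposed to databases)
    payload = {"query": query, "filter": {"property": "object", "value": "page"}}
    return NOTION_SEARCH_URL, headers, json.dumps(payload).encode("utf-8")


def find_page_urls(secret, query):
    """Return the URLs of matching pages that the integration can access."""
    url, headers, body = build_search_request(secret, query)
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request) as response:
        results = json.load(response)["results"]
    return [page["url"] for page in results]
```

Calling `find_page_urls(os.environ["NOTION_INTEGRATION_SECRET"], "News")` should list the "News" page once it has been connected. An empty list usually suggests the page has not been shared with the integration yet.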

Scripts

Some APIs and frameworks (e.g. Gemini, LangGraph, and LlamaIndex agent) automatically execute tool calls, which makes the code much simpler. For the rest, we need to add a while loop so that the agent keeps working on the next step until the task is completed.
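To make the loop idea concrete before looking at a real API, here is a framework-free sketch. Everything in it is hypothetical: `call_model` and `execute_tool` stand in for a real model client and tool index, and the reply format is simplified. It also caps the number of steps, which is our own addition to avoid an endless loop.

```python
def run_agent(call_model, execute_tool, messages, max_steps=10):
    """Call the model repeatedly, running requested tools, until it stops asking.

    `call_model(messages)` is a hypothetical callable returning {"text": ...}
    when done, or {"tool": name, "args": {...}} to request a tool call.
    `execute_tool(name, args)` is a hypothetical callable that runs the tool.
    """
    for _ in range(max_steps):
        reply = call_model(messages)
        if "tool" not in reply:
            return reply["text"]  # No tool requested: the task is complete
        output = execute_tool(reply["tool"], reply["args"])
        # Feed the tool call and its result back as context for the next step
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "user", "content": str(output)})
    raise RuntimeError(f"Agent did not finish within {max_steps} steps")


# Stand-in model: requests one tool call, then answers once it sees the result
def fake_model(messages):
    if len(messages) == 1:
        return {"tool": "get_top_posts", "args": {"n": 3}}
    return {"text": f"Done. Tool said: {messages[-1]['content']}"}


# Stand-in tool: returns placeholder post titles
def fake_tool(name, args):
    return [f"post {i + 1}" for i in range(args["n"])]


result = run_agent(fake_model, fake_tool, [{"role": "user", "content": "Get top posts"}])
```

The real script follows the same shape: ask the model, run any tool it requests, append both to the message history, and repeat.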

From my experiments, Gemini 2.0 Flash prefers to ask questions instead of using the tools to find the information it needs. You can either specify in the system prompt to use tools or try Gemini 2.5 Pro, which is much better at using tools.

    import os

    import anthropic
    from dotenv import load_dotenv

    import stores

    # Load environment variables
    load_dotenv()

    # Load tools and set the required environment variables
    index = stores.Index(
        ["silanthro/notion", "silanthro/hackernews"],
        env_var={
            "silanthro/notion": {
                "NOTION_INTEGRATION_SECRET": os.environ["NOTION_INTEGRATION_SECRET"],
            },
        },
    )

    # Initialize Anthropic client and messages
    client = anthropic.Anthropic()
    messages = [
        {
            "role": "user",
            "content": "Add the top 3 Hacker News posts to a new Notion page, Top HN Posts (today's date in YYYY-MM-DD), in my News page",
        }
    ]

    # Run agent loop
    while True:
        # Get the response from the model
        response = client.messages.create(
            model="claude-3-5-sonnet-20241022",
            max_tokens=1024,
            messages=messages,
            # Pass tools
            tools=index.format_tools("anthropic"),
        )

        # Check if all blocks contain only text, which indicates task completion for this example
        blocks = response.content
        if all(block.type == "text" for block in blocks):
            print(f"Assistant response: {blocks[0].text}\n")
            break  # End the agent loop

        # Otherwise, process the response, which could include both text and tool use
        for block in blocks:
            if block.type == "text" and block.text:
                print(f"Assistant response: {block.text}\n")
                # Append the assistant's response as context
                messages.append({"role": "assistant", "content": block.text})
            elif block.type == "tool_use":
                name = block.name
                args = block.input

                # Execute tool call
                print(f"Executing tool call: {name}({args})\n")
                output = index.execute(name, args)
                print(f"Tool output: {output}\n")

                # Append the assistant's tool call message as context
                messages.append(
                    {
                        "role": "assistant",
                        "content": [
                            {
                                "type": "tool_use",
                                "id": block.id,
                                "name": block.name,
                                "input": block.input,
                            }
                        ],
                    }
                )

                # Append the tool result message as context
                messages.append(
                    {
                        "role": "user",
                        "content": [
                            {
                                "type": "tool_result",
                                "tool_use_id": block.id,
                                "content": str(output),
                            }
                        ],
                    }
                )
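One thing to watch: the prompt asks the model to fill in today's date, and a model may not reliably know the current date. A hedged alternative is to compute the date in Python and put it in the prompt ourselves. The helper `build_prompt` below is our own addition, not part of the original script.

```python
from datetime import date


def build_prompt(today=None):
    """Return the task prompt with the date filled in as YYYY-MM-DD."""
    today = today or date.today()
    title = f"Top HN Posts ({today.isoformat()})"
    return (
        f'Add the top 3 Hacker News posts to a new Notion page, "{title}", '
        "in my News page"
    )
```

You could then use `build_prompt()` as the `content` of the first user message.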

In the folder where you have this script, you can run the AI agent with the command:

    python research-to-notion.py

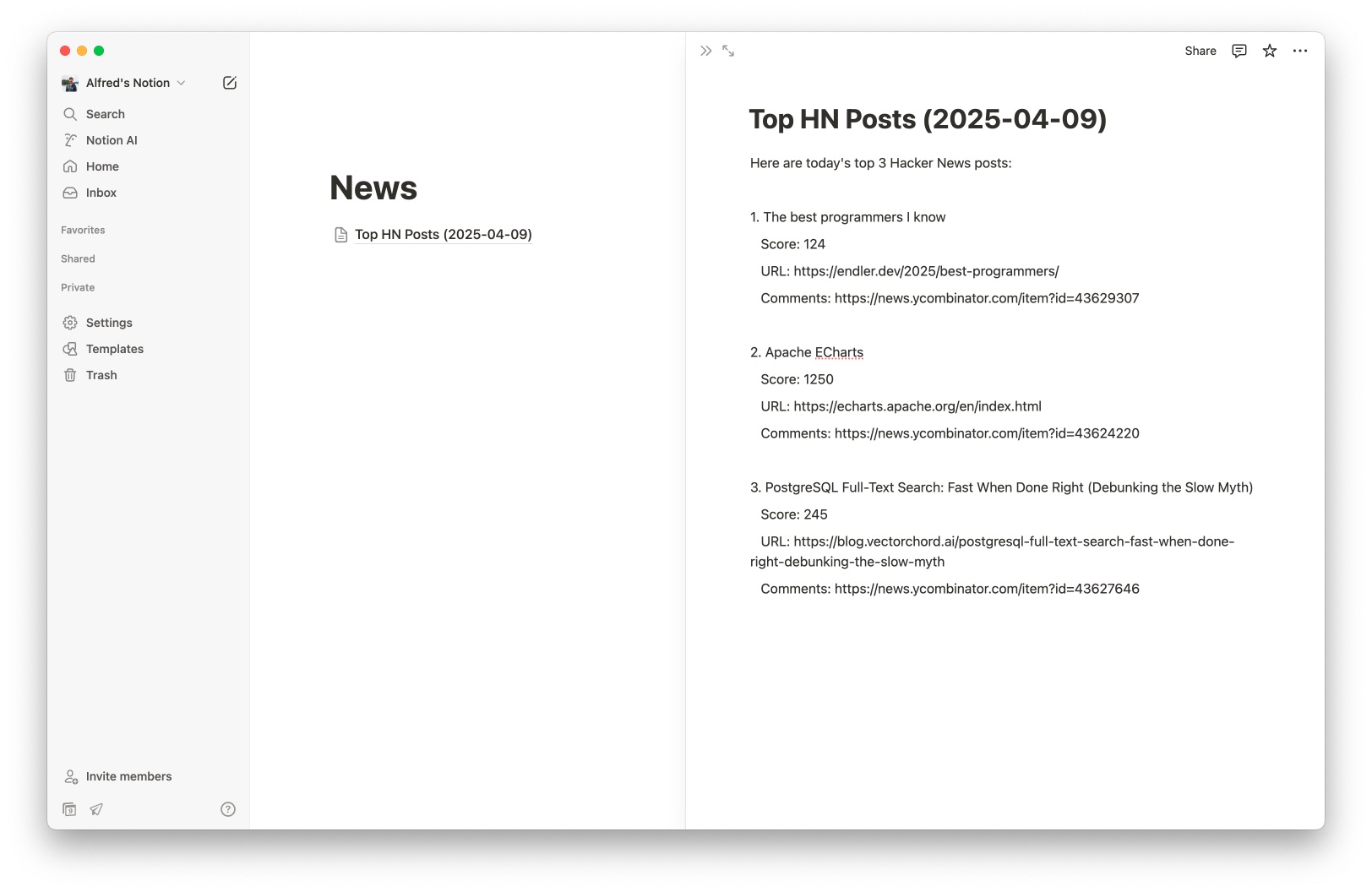

The AI agent will research Hacker News, find the "News" page, and add a new page with the top 3 posts.

To make this AI agent even more useful, you can set up a cron job to run this script every morning and get a daily digest waiting for you in your Notion.
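As a rough sketch of the cron idea, the helper below builds a crontab entry that runs the script once a day. The function name, the default time of 07:00, and the example folder are all assumptions for illustration. Cron often runs with a minimal PATH, so you may need to pass the absolute path of your Python interpreter.

```python
import shlex


def build_cron_line(script_dir, hour=7, minute=0, python="python"):
    """Return a crontab entry that runs research-to-notion.py once a day."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute must be 0-59")
    # Change into the script's folder first, then run the script
    command = f"cd {shlex.quote(script_dir)} && {python} research-to-notion.py"
    # Crontab fields: minute hour day-of-month month day-of-week
    return f"{minute} {hour} * * * {command}"
```

For example, `build_cron_line("/home/me/agents")` (a hypothetical folder) gives a line you can paste into the editor opened by `crontab -e`.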

If you have any questions, let us know on GitHub.